Portfolio

I am a seasoned mechanical engineer with over five years of design and innovation experience in Apple’s Image and Sensing Incubation group. My role covers the entire lifecycle of technology development and human interaction exploration across multiple product categories: phones, portable computers, home, AR/VR, and health. At the core of my work is translating ideas and requirements into reality, where I specialize in driving architecture and product design and in orchestrating cross-functional collaboration. I have deep expertise in building robust data collection devices that improve computer vision algorithms. I enable cutting-edge technologies and interactions, from proof-of-concept prototypes to full-form-factor live-on systems. As you explore my portfolio, you'll see my commitment to excellence in mechanical product design, innovation, and improving human interaction.

Patents

-

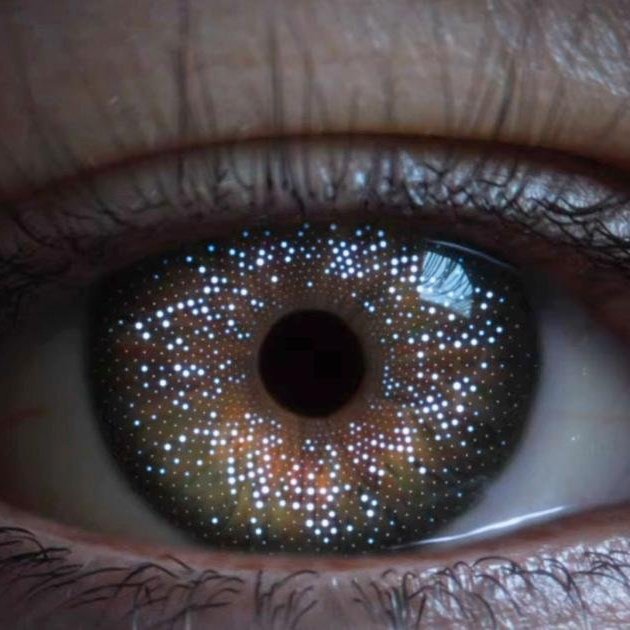

An electronic device with a translucent layer and an opaque layer containing holes so small that they are invisible to the user. A light source sends light through the holes into the user's body, and a light receiver detects the light that reflects back. This signal is used to determine physiological data such as heart rate, respiration rate, and blood oxygen level. The device can be used for health monitoring and is designed to save power when not in use: it can detect whether the user's body is nearby and switch modes accordingly to reduce power consumption.

-

A ring input device is disclosed, in particular pressure-sensitive input mechanisms within the ring that detect pressure to initiate an operation. Because finger rings are small and routinely worn, electronic finger rings can serve as unobtrusive communication devices that are always at hand to communicate wirelessly with other devices capable of receiving those communications. Ring input devices according to examples of the disclosure can detect press inputs on their bands and wirelessly communicate the resulting inputs to companion devices.

-

A system may include an electronic device and one or more finger devices. The electronic device may have a display, and the user may provide finger input to the finger device to control the display. The finger input may include pinching, tapping, rotating, swiping, pressing, and/or other finger gestures that are detected using sensors in the finger device. Sensor data related to finger movement may be combined with user gaze information to control items on the display. The user may turn any surface or region of space into an input region by first defining the boundaries of that region using the finger device. Other finger gestures, such as pinching and pulling, pinching and rotating, swiping, and tapping, may be used to navigate a menu on a display, scroll through a document, manipulate computer-aided designs, and provide other input to a display.

-

An electronic device includes a translucent layer that forms a portion of an exterior of the electronic device, an opaque material positioned on the translucent layer that defines micro-perforations, and a processing unit operable to determine information about a user via the translucent layer. The processing unit may be operable to determine the information by transmitting optical energy through a first set of the micro-perforations into a body part of the user, receiving a reflected portion of the optical energy from the body part of the user through a second set of the micro-perforations, and analyzing the reflected portion of the optical energy.

-

Embodiments are directed to an electronic device having an illuminated body that defines a virtual or dynamic trackpad. The electronic device includes a translucent layer defining a keyboard region and a dynamic input region along an external surface. A keyboard may be positioned within the keyboard region and may include a key surface and a switch element (e.g., to detect a keypress). A light control layer positioned below the translucent layer and within the dynamic input region may have a group of illuminable features.

-

Embodiments are directed to an electronic device having a hidden or concealable input region. In one aspect, an embodiment includes an enclosure having a wall that defines an input region having an array of microperforations. A light source may be positioned within a volume defined by the enclosure and configured to propagate light through the array of microperforations. A sensing element may be coupled with the wall and configured to detect input received within the input region. The array of microperforations is configured to be visually imperceptible when not illuminated by the light source. When illuminated by the light source, the array of microperforations may display a symbol.